ashleysally00

Knowledge Share Blog

Recent Posts

Something I Learned About Python This Week

Something I Learned About Python This Week

I started building with Python by learning from online tutorials and personal projects. But my learning had gaps. Recently, while automating a script, I started to notice something I hadn’t realized about Python before: it’s not just object-oriented. Then, I looked it up!

If, like me, you learned Python mostly through tutorials and projects, you might have noticed that Python shares loops and other features with JavaScript. Why is this? Most programming languages need ways to repeat actions, like using loops, to make code more efficient. But here’s what I didn’t know—loops aren’t only part of object-oriented programming (OOP). They’re also used in procedural programming. Python isn’t just object-oriented—it can be procedural too.

What is Procedural Programming?

Procedural programming is a style where you write step-by-step instructions, using functions, variables, and loops to control the flow. It’s like following a recipe: do this, then that, in order. You can think of it as breaking down a task into a series of steps, where each step is clearly defined.

In Python, you can use procedural programming when you:

- Write scripts that process data (like cleaning and transforming datasets).

- Automate simple tasks, such as renaming files or sending emails.

- Run machine learning or AI pipelines step-by-step, where each stage follows the previous one.

Example of Procedural Python

import csv

# Define the function to load CSV

def load_csv(filename):

rows = []

with open(filename, mode='r') as file:

csv_reader = csv.reader(file)

for row in csv_reader:

rows.append(row)

return rows

# Example usage

filename = 'your_file.csv'

data = load_csv(filename)

print(data)

This is a basic example where we load a CSV file step by step: open the file, read it row by row, and store the data in a list. It’s simple and works great for smaller tasks that don’t require complex structures. I had done this one a lot! But i had not thought about what it meant.

But You’ve Probably Also Used Python as OOP

Python also supports Object-Oriented Programming (OOP), which is another way to write Python code. OOP organizes code into classes and objects. Each object can have attributes (data) and methods (functions) that operate on that data. You use OOP when you want to represent real-world entities or concepts that have attributes and behaviors.

Example of OOP in Python

class Greeter:

def __init__(self, name):

self.name = name

def greet(self):

print(f"Hello, {self.name}!")

greeter = Greeter("Ashley")

greeter.greet()

This is an example of Python’s OOP capabilities. The Greeter class encapsulates a name attribute and a method to print a greeting. When you create an instance of Greeter, you are defining a particular greeting behavior that you can reuse.

Why Do We Hear So Much About Python as OOP and Not Procedural?

While Python can be used for both procedural and object-oriented programming, OOP is often emphasized in courses and tutorials because it helps structure larger, more complex projects. As projects grow, OOP becomes more useful because it allows you to break the project into manageable components (like classes for different parts of your app), which makes the code more modular and reusable.

However, procedural programming is still widely used, especially in smaller scripts, data manipulation tasks, and machine learning workflows. When working with data preprocessing or running a machine learning pipeline step-by-step, procedural Python is often the simpler and more efficient approach.

When to Use Procedural vs. OOP in Python

Use Procedural Programming When:

- You have simple scripts that need to perform linear tasks (e.g., file manipulation, simple data cleaning).

- You’re building quick automation tasks, such as renaming files or sending emails.

- You’re working with data processing pipelines, like reading and transforming CSV files, without needing to manage complex entities.

Use OOP When:

- You need to model real-world entities or complex systems, such as a banking system with customers, accounts, and transactions.

- You want code reuse and modularity—where multiple components (e.g., user management, authentication) interact but remain separate and manageable.

- Your application needs extensibility and the ability to add new features easily, such as adding new shapes to a graphics editor.

Summary

Python’s versatility allows you to use it in both procedural and object-oriented ways. Procedural programming is great for small, linear tasks like automating file management or cleaning data. On the other hand, OOP shines when you need to model more complex systems or when you need to maintain, extend, and reuse your codebase as your projects grow.

By understanding both approaches, you can choose the right one based on the complexity of the task at hand. Whether you’re writing a quick script or building a large application, Python has the tools to help you do it efficiently!

Have you used Python procedurally or with OOP? Share your experiences in the comments!

Note: I am now going to try enabling comments in my GitHub blog using Utterances! I am attempting to make this into a standard blog format, little by little! Thanks

My First Kaggle Competition: Gen AI Intensive Course Capstone 2025Q1 – Learning About Agents and the Expanding Capabilities of Generative AI

My First Kaggle Competition: Gen AI Intensive Course Capstone 2025Q1 – Learning About Agents and the Expanding Capabilities of Generative AI

One of the most powerful uses of machine learning is its ability to analyze and interpret real-world data, like audio, to improve safety and decision-making. For my Gen AI Intensive Course Capstone 2025Q1 project, I developed SoundGuard. It’s an emergency sound detection system that utilizes generative AI to identify urgent audio signals and then trigger appropriate responses.

How the Project Started

The Gen AI Intensive Course by Google showcased the multimodal capabilities of generative AI. It can now interpret many types of data, including text, images, and audio. Knowing this, I wanted to develop a tool to interpret audio. I also hoped to find a way to apply that to real-world use cases.

How It Works

SoundGuard uses machine learning to classify emergency sounds. By processing audio, it can detect sounds like glass breaking or siren alarms. Once a sound is identified, a chatbot responds with natural language instructions, helping the user assess the situation and take action. The system is designed to be calm and reassuring in emergencies.

For the project, I used the ESC-50 dataset to train the model, and integrated it with Hugging Face and Google Cloud Storage for seamless real-time interaction.

Gen AI Capabilities & Requirements

The Gen AI Intensive Course Capstone 2025Q1 project required the use of at least three Gen AI capabilities. Here are the capabilities listed in the competition:

- Structured output/JSON mode/controlled generation

- Few-shot prompting

- Document understanding

- Image understanding

- Video understanding

- Audio understanding

- Function calling

- Agents

- Long context window

- Context caching

- Gen AI evaluation

- Grounding

- Embeddings

- Retrieval-augmented generation (RAG)

- Vector search/vector store/vector database

- MLOps (with GenAI)

For SoundGuard, I focused on three core capabilities to meet the project’s requirements:

- Audio Understanding: Using YAMNet and the ESC-50 dataset, SoundGuard classifies emergency sounds like glass breaking and sirens in real time. This allows the system to recognize audio and trigger the appropriate responses.

- Few-Shot Prompting: The chatbot in SoundGuard leverages few-shot prompting to generate actionable, natural language instructions based on the audio classification. When an emergency sound is detected, the chatbot quickly delivers instructions, like suggesting a course of action in response to a siren or glass breaking.

- Function Calling / Agents: The chatbot agent guides users through the necessary steps based on the detected audio. For example, if it detects glass breaking, the agent can suggest that users check for potential break-ins, providing helpful and calm instructions.

Dataset Choice: ESC-50

We chose the ESC-50 dataset, which is a collection of environmental audio clips. This includes sounds of sirens, glass breaking, and alarms. It includes both audio classifications and labels for environmental sounds. These labels help the model to recognize different emergency events.

Model Choice: YAMNet

We decided to use YAMNet, a pretrained model developed by Google. The model is available on TensorFlow Hub. YAMNet was trained on AudioSet, a large dataset with millions of YouTube videos. It can classify audio into 521 different categories. Its lightweight, fast, and efficient design makes YAMNet ideal for real-time audio classification. This is crucial because real-time audio classification must be fast.

Challenges Faced

Despite its capabilities, YAMNet presented some challenges. It was trained on YouTube audio clips, which can vary in quality and background noise. The ESC-50 dataset's clean, short recordings differed from YouTube's noisy, longer clips. This lead to issues with being able to classify certain sounds. Glass breaking was sometimes classified as silence, or sirens as irrelevant noises.

Solution: Confidence Thresholding and Fallback

To address these issues, we implemented a confidence thresholding system. YAMNet often produced low-confidence scores for correct classifications. We ran code several times. Sometimes the scores were as low as 0.01 for accurate predictions.

When the model’s confidence was below 0.02, we applied a fallback mechanism to ESC-50's ground truth labels. This ensured that emergency sounds like sirens and glass breaking were reliably flagged. This helped SoundGuard to excel, even when YAMNet struggled to identify edge cases.

Frontend Interface: Gradio and Hugging Face

We wanted to create a working interface where users could interact with the chatbot. We knew that first we needed to upload the audio files to storage. That way, they could be accessed and streamed in real time. We decided to use Google Cloud Storage (GCS) to host the audio files from the ESC-50 dataset.

Storing Audio Files in Google Cloud Storage

The ESC-50 dataset consists of 2,000 audio recordings, each 5 seconds long, covering 50 different sound events.

We focused on a subset of the dataset containing emergency-related sounds. This included sirens, glass breaking, and alarms. So, we filtered the dataset to only include these relevant categories. Then we exported the audio file metadata into a CSV file, which included the URLs for each file in the subset. We then created a GCS bucket to store these files. We uploaded each file separately and linked to their corresponding URLs. This ensured easy access for the live demo.

Building the Demo with Gradio on Hugging Face

With the audio files hosted on GCS, we turned our attention to the frontend of the project. We wanted to create a simple, user-friendly interface. We decided to make a Hugging Face space and chose their Gradio chatbot template. This interface allowed users to interact with the SoundGuard chatbot. It's a simple real-time demo where users can listen to classified emergency sounds. Then they receive actionable, calming instructions from the chatbot.

Integration with GitHub

We pushed the Kaggle notebook to GitHub. This way, we could store and manage the codebase for the entire project in a GitHub repo. Next, we integrated Hugging Face Spaces with GitHub. This links the repository to the demo for real-time functionality. That means Github can manage the backend logic. The backend logic is important. Here, it includes sound classification and the chatbot's responses. New changes in GitHub are automatically reflected in the Hugging Face space. This keeps the chatbot updated both now and in the future.

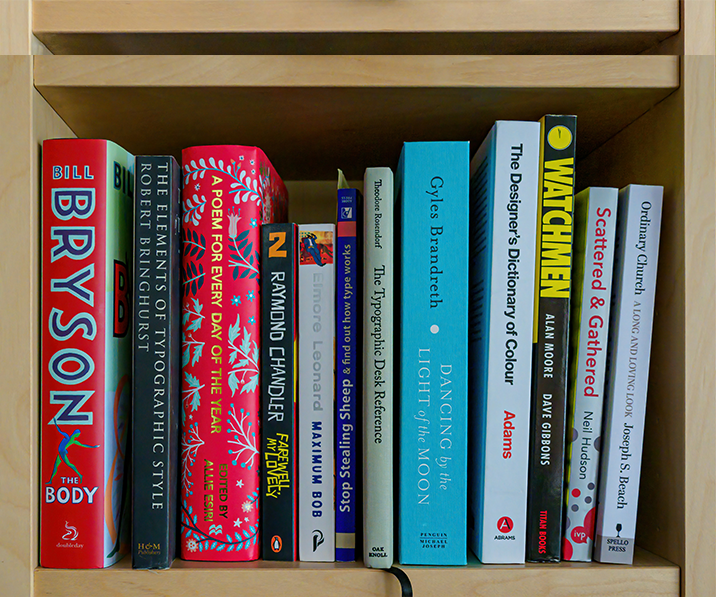

Turn Bookshelf Photos into Structured Data with Gemini 1.5 Pro

Extracting Book Data from Images with Gemini 1.5 Pro

This screencast demonstrates how to use Google AI Studio and Gemini 1.5 Pro to extract structured book data from bookshelf photos. By leveraging OCR (Optical Character Recognition) and AI-powered data extraction, you can convert book titles and authors into structured JSON format, then transform it into CSV for easy spreadsheet use. You can then apply this same idea to other things.

Watch the Screencast

I recorded a screencast demonstrating how to extract structured book data from images using Gemini 1.5 Pro.

Images Used in This Project

The following images by Brett Jordan, Unsplash were used in the experiment:

How I Used Google AI Studio for OCR and Structured Data Extraction

Step 1: Upload an Image

I uploaded a photo of a bookshelf to Google AI Studio.

Step 2: Enter the Prompt

I used the following prompt to guide Gemini 1.5 Pro in extracting book details:

You are an expert data extractor and librarian. Your task is to analyze images of bookshelves and extract information about the books.

- Text Extraction (OCR): Carefully examine the image and use optical character recognition (OCR) to identify and extract the book titles and authors.

- Subject Identification (Attempt): Based on the extracted text, attempt to infer the subject or genre of each book. This might involve looking for keywords in the title or author’s name. If you cannot confidently determine the subject, leave it as “Unknown”.

- JSON Output: Return the extracted data in a JSON format. Each book should be represented as a separate JSON object within an array. The JSON object should have the following keys: “title”, “author”, and “subject”.

Example Output Format:

[

{

"title": "The Lord of the Rings",

"author": "J.R.R. Tolkien",

"subject": "Fantasy"

},

{

"title": "Pride and Prejudice",

"author": "Jane Austen",

"subject": "Classic Literature"

},

{

"title": "A Brief History of Time",

"author": "Stephen Hawking",

"subject": "Physics"

},

{

"title": "Some Book with a title that does not give away the subject",

"author": "Some Author",

"subject": "Unknown"

}

]

Please analyze the provided image and generate the JSON output.

Step 3: Review the JSON Output

Gemini 1.5 Pro processed the image and returned structured JSON data:

[

{

"title": "Meat",

"author": "Pat LaFrieda",

"subject": "Cooking"

},

{

"title": "Simply Beautiful Photographs",

"author": "Griffiths",

"subject": "Photography"

},

{

"title": "Simple",

"author": "Diana Henry",

"subject": "Cooking"

},

{

"title": "Salt is Essential",

"author": "Shaun Hill",

"subject": "Cooking"

},

{

"title": "gather",

"author": "Gill Meller",

"subject": "Cooking"

},

{

"title": "What to Cook & How to Cook it",

"author": "Jane Hornby",

"subject": "Cooking"

},

{

"title": "Rick Stein's India",

"author": "Rick Stein",

"subject": "Cooking, Travel"

},

{

"title": "The Great British Bake Off",

"author": "Linda Collister",

"subject": "Cooking"

},

{

"title": "Perfect Cakes & Bakes to Make at Home",

"author": "Linda Collister",

"subject": "Cooking"

},

{

"title": "Recharge Your Design Batteries",

"author": "John Norris",

"subject": "Design"

},

{

"title": "The Complete Guide to Stretching",

"author": "Christopher M. Norris",

"subject": "Health, Fitness"

},

{

"title": "I'm Not Your Friend, I'm Free",

"author": "Sam McBratney, Kim Lewis",

"subject": "Unknown"

},

{

"title": "When from Death",

"author": "John Rawson",

"subject": "Unknown"

},

{

"title": "An Illustrated Life",

"author": "Danny Gregory",

"subject": "Art, Inspiration"

}

]

Step 4: Convert JSON to CSV Once I had the JSON data, I converted it into a CSV file for use in spreadsheets. Here’s the Python script I used:

import pandas as pd

# Load the JSON file into a DataFrame

df = pd.read_json('books.json')

# Save as CSV

df.to_csv('books.csv', index=False)

print("books.json has been successfully converted to books.csv")

Now, I could open books.csv in Google Sheets or Excel for further analysis.

Applying This Process to Other Real-World Use Cases This method of OCR and structured data extraction can be extended beyond books. Here are some real-life applications:

-

Inventory Management Retailers and warehouses can take shelf photos to automatically extract product names, brands, and barcodes, reducing manual data entry.

-

Logistics & Package Tracking Shipping companies can scan package labels and extract tracking numbers, recipient names, and addresses into a structured format.

-

Healthcare & Prescription Management

Pharmacists can digitize prescription labels, extracting patient names, medications, and dosage instructions for better record-keeping. Conclusion Using Google AI Studio and Gemini 1.5 Pro, you can:

Extract structured data from images using OCR.

Convert JSON to CSV for easier spreadsheet use. Apply this method to real-world applications in retail, logistics, and healthcare. This is just one example of how AI can automate tedious tasks and improve efficiency in data processing. AI is changing every day…

Next Steps

Try extracting structured data using Google AI Studio.

Convert the JSON output into CSV for analysis.

Think about how this method can be applied to other industries.🧠

Let me know if you try it, or if you figure something else out!! ⭐

March 17, 2025: Google Cloud Day 2

Today I learned more about Google Cloud:

March 16, 2025: Google Cloud

I learned this today at Google Cloud: